Artificial intelligence (AI) has quickly become one of the most transformative forces in modern technology. From the field’s early conceptualization by pioneer John McCarthy to today’s tech leaders, such as Elon Musk, who advocate for AI safety, the role of AI in shaping the future is undeniable. AI is more than just clever computer programs; it’s about machines that learn, reason, and make decisions in ways that can resemble human thinking. But with great power comes great responsibility, and that’s where standards like AS ISO/IEC 42001:2023 come in.

This global standard provides a framework that helps organizations develop and use AI ethically, transparently, and responsibly, and it applies to organizations of any size in any sector. From preventing AI mishaps to fostering trust in AI deployments, ISO/IEC 42001 is key to navigating the rapidly evolving AI landscape.

What is AS ISO/IEC 42001:2023?

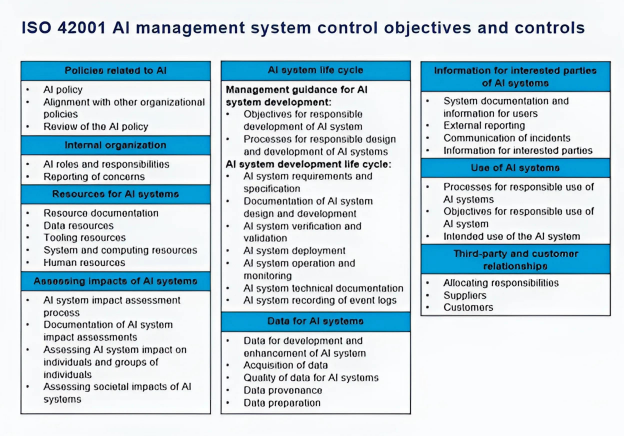

AS ISO/IEC 42001:2023 is the Australian adoption of ISO/IEC 42001:2023, the international management system standard (MSS) for artificial intelligence. It specifies requirements for establishing, implementing, maintaining, and continually improving an AI management system (AIMS), so that organizations can develop, provide, or use AI systems in a way that fosters trust, accountability, and transparency. Just as ISO 9001 does for quality management and ISO/IEC 27001 does for information security, ISO/IEC 42001 provides a certifiable framework for responsible AI governance.

Why We Need AI Standards

AI is not just another piece of software. Its self-learning and decision-making abilities introduce distinctive risks such as data poisoning (corrupting the data a model learns from), model inversion attacks (reconstructing sensitive training data from a model’s outputs), and biased outcomes. As Elon Musk has argued, AI deserves oversight much as cars and airplanes are regulated, because public safety must come first. AI systems need clear governance to avoid unintended consequences and to ensure that their use aligns with societal values.

Without proper oversight, AI can easily become an unpredictable force, eroding trust and causing more harm than good. The ISO/IEC 42001 standard helps organizations address these risks so that AI is used for the greater good while maintaining ethical integrity.

Key Benefits of ISO/IEC 42001:2023

- Cultivating Trustworthy AI Systems: ISO/IEC 42001 promotes trustworthy AI by requiring transparency and accountability in how AI systems are managed. Combined with practices such as algorithmic transparency and explainability, this helps make AI systems more reliable and predictable.

- Embracing Ethical AI: The standard encourages organizations to build ethical considerations such as fairness and inclusivity into AI development, for example by assessing the impact of AI systems on individuals and groups. Organizations can support this with fairness testing and bias-mitigation techniques so that AI decisions reflect ethical values.

- Risk Identification and Mitigation: ISO/IEC 42001 requires organizations to define and apply an AI risk assessment and treatment process. Techniques such as threat modeling and probabilistic analysis can feed into that process, helping to mitigate risks that arise from AI’s complex, self-learning nature.

- Regulatory Compliance: The standard supports compliance with data privacy and other regulations by requiring organizations to consider their legal obligations and to govern the data their AI systems use. Privacy-enhancing techniques such as differential privacy and federated learning can further strengthen data protection.

- User Confidence: By encouraging user-centric design, the standard helps build user trust. Collecting and acting on user feedback (for example, through surveys or sentiment analysis) keeps AI systems aligned with user needs and expectations while prioritizing users’ well-being and safety.

- Strengthening Organizational Reputation: Organizations that adopt ISO/IEC 42001 demonstrate leadership in ethical AI practices. This strengthens their reputation and fosters trust among stakeholders, providing a competitive advantage.
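ISO/IEC 42001 asks organizations to assess AI risks against criteria they define themselves; it does not prescribe a scoring formula. As a minimal sketch, assuming a simple 1 to 5 likelihood × impact matrix and a hypothetical treatment threshold of 12, an AI risk register covering risks like data poisoning, model inversion, and biased outcomes might be ranked like this. The class name `AIRisk`, the `prioritize` helper, the threshold, and the example scores are all illustrative assumptions.

```python
from dataclasses import dataclass

# Hypothetical threshold: risks scoring at or above this value need a treatment plan.
# ISO/IEC 42001 leaves risk criteria to the organization; 12 is only an example.
TREATMENT_THRESHOLD = 12


@dataclass
class AIRisk:
    """One entry in an illustrative AI risk register (not defined by the standard)."""
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)

    def __post_init__(self) -> None:
        # Reject ratings outside the assumed 1-5 scale.
        for field_name in ("likelihood", "impact"):
            value = getattr(self, field_name)
            if not 1 <= value <= 5:
                raise ValueError(f"{field_name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # Classic risk-matrix scoring: likelihood multiplied by impact (range 1 to 25).
        return self.likelihood * self.impact

    @property
    def needs_treatment(self) -> bool:
        return self.score >= TREATMENT_THRESHOLD


def prioritize(risks: list[AIRisk]) -> list[AIRisk]:
    """Return risks from highest to lowest score; equal scores keep their input order."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


if __name__ == "__main__":
    # Example ratings are made up for illustration.
    register = [
        AIRisk("Data poisoning of training data", likelihood=3, impact=5),
        AIRisk("Model inversion exposing personal data", likelihood=2, impact=4),
        AIRisk("Biased outcomes in automated decisions", likelihood=4, impact=4),
    ]
    for risk in prioritize(register):
        action = "treat" if risk.needs_treatment else "accept/monitor"
        print(f"{risk.score:>2}  {action:<14} {risk.name}")
```

Multiplying likelihood by impact is only one common convention; many organizations use qualitative matrices or weighted scores instead, and the resulting register is one input to the risk treatment decisions the standard expects.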

ISO/IEC 42001 Structure

The ISO/IEC 42001 framework follows the classic Plan-Do-Check-Act (PDCA) cycle, which is familiar to many in the world of quality and process management:

- Plan: Define the context, leadership commitment, and scope of the AI management system (AIMS). This stage includes stakeholder analysis, developing AI policies, setting AI objectives, and planning how to address AI risks and opportunities.

- Do: Focus on support and operation. This involves allocating resources, managing data, and ensuring AI systems are continuously monitored and assessed for risks.

- Check: Regularly evaluate the performance of the AI management system through monitoring, audits, and management reviews.

- Act: Continuously improve AI processes and systems by identifying and addressing non-conformities and taking corrective actions.

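This structure ensures that AI systems are managed systematically. Because ISO/IEC 42001 shares the harmonized high-level structure used by other ISO management system standards, such as ISO 9001 (quality management) and ISO/IEC 27001 (information security), organizations can integrate it with the systems they already run, creating a comprehensive approach to AI governance.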
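The PDCA loop can be illustrated with a small toy model. This is a sketch under stated assumptions, not anything defined by ISO/IEC 42001: the names `AIMS`, `Objective`, `Nonconformity`, `check`, and `act`, as well as the metrics and targets, are hypothetical. It shows how objectives set in Plan become the yardstick for Check, and how shortfalls become nonconformities that Act must close with corrective actions.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Objective:
    """A measurable AI objective set in the Plan phase (hypothetical example)."""
    metric: str
    target: float
    higher_is_better: bool = True

    def is_met(self, value: float) -> bool:
        return value >= self.target if self.higher_is_better else value <= self.target


@dataclass
class Nonconformity:
    """A shortfall found in the Check phase; observed is None if nothing was measured."""
    metric: str
    observed: Optional[float]
    target: float
    corrective_action: str = "pending"


@dataclass
class AIMS:
    """Toy model of one PDCA iteration. Names are illustrative, not taken from the standard."""
    objectives: list[Objective] = field(default_factory=list)
    nonconformities: list[Nonconformity] = field(default_factory=list)

    def plan(self, objective: Objective) -> None:
        # Plan: record an objective that later monitoring results are judged against.
        self.objectives.append(objective)

    def check(self, measurements: dict[str, float]) -> list[Nonconformity]:
        # Check: compare monitoring results (produced during Do) with each objective.
        # A missing measurement counts as a nonconformity, since there is no evidence.
        found = []
        for obj in self.objectives:
            value = measurements.get(obj.metric)
            if value is None or not obj.is_met(value):
                found.append(Nonconformity(obj.metric, value, obj.target))
        self.nonconformities.extend(found)
        return found

    def act(self, corrective_actions: dict[str, str]) -> None:
        # Act: attach a corrective action to each still-open nonconformity it addresses.
        for nc in self.nonconformities:
            if nc.corrective_action == "pending" and nc.metric in corrective_actions:
                nc.corrective_action = corrective_actions[nc.metric]


if __name__ == "__main__":
    aims = AIMS()
    aims.plan(Objective("accuracy", 0.90))
    aims.plan(Objective("demographic_parity_gap", 0.05, higher_is_better=False))

    # Do: the AI system operates and is monitored (made-up illustrative numbers).
    measurements = {"accuracy": 0.93, "demographic_parity_gap": 0.08}

    for nc in aims.check(measurements):
        print(f"Nonconformity: {nc.metric} observed {nc.observed}, target {nc.target}")

    aims.act({"demographic_parity_gap": "Rebalance training data and re-evaluate"})
    print(aims.nonconformities[0].corrective_action)
```

Treating a missing measurement as a nonconformity is a deliberate choice in this sketch: an objective that nobody monitors cannot be shown to be met, which is exactly the kind of gap the Check phase is meant to surface.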

The Global Importance of AI Standards

AI is shaping the future in ways that few other technologies can. But as it advances, so do concerns about its ethical and safe use. From John McCarthy’s vision of intelligent machines to Elon Musk’s warnings about AI safety, it’s clear that robust governance is necessary.

ISO/IEC 42001 fills this critical gap by establishing a global framework that helps organizations manage the risks associated with AI technologies. It helps ensure that AI systems are transparent, accountable, and aligned with both regulatory requirements and ethical principles. Trust, as the cornerstone of AI adoption, is non-negotiable. Without trust, the widespread adoption of AI could be stifled, delaying innovations that have the potential to change industries and improve lives.

This standard is not just about mitigating risks—it’s also about ensuring that AI systems are inclusive, fair, and responsible. By adopting ISO/IEC 42001, organizations signal to their customers and stakeholders that they are committed to ethical AI practices and ready to lead in the rapidly evolving AI landscape.

Conclusion

AI has the potential to be either a superhero or an arch-nemesis, depending on how we choose to govern it. While the idea of an AI rebellion might sound like the plot of a sci-fi movie, the reality is that unchecked AI development could have serious consequences. That’s why adopting standards like AS ISO/IEC 42001:2023 is crucial.

This global standard provides organizations with a framework for the safe and ethical use of AI, fostering transparency, mitigating risks, and strengthening trust between organizations and their stakeholders. In a world increasingly driven by AI, adhering to these standards ensures that we harness its full potential responsibly while avoiding the pitfalls of unregulated growth.

Prime Infoserv plays a vital role in guiding organizations through the complexities of AI governance and compliance. By helping implement these standards, Prime Infoserv ensures that businesses not only meet regulatory requirements but also build trust with their customers and partners. As we continue to explore the capabilities of AI, Prime Infoserv is here to put the right safeguards in place—because when it comes to AI, trust isn’t just a nice-to-have; it’s essential for a future we can all rely on.

For a deeper dive into AI governance and compliance, feel free to contact us at info@primeinfoserv.com.